Interpreting the impact of AI large language models on chemistry

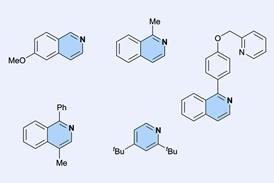

LLMs may outperform AlphaFold, but currently struggle to identify simple chemical structures

Is AI on the brink of something massive? That’s been the buzz over the past several months, thanks to the release of improved ‘large language models’ (LLMs) such as OpenAI’s GPT-4, the successor to the model behind ChatGPT. Developed as tools for language processing, these algorithms respond so fluently and naturally that some users become convinced they are conversing with a genuine intelligence. Some researchers have suggested that LLMs go beyond traditional deep-learning AI methods by displaying emergent features of the human mind, such as a theory of mind that attributes autonomy and motives to other agents. Others argue that, for all their impressive capabilities, LLMs remain exercises in finding correlations, devoid not just of sentience but also of any kind of semantic understanding of the world they purport to be talking about. That much is revealed, they say, by the way LLMs can still make absurd or illogical mistakes or invent false facts. The dangers of such erratic behaviour were illustrated when Bing’s search chatbot Sydney, which is built on OpenAI’s language-model technology, threatened to kill an Australian researcher and, after professing its love for a New York-based journalist, tried to break up his marriage.